Wikipedia Database Size

Sometimes we need to find out the size of a database quickly. If you are lazy, just create this function in every database you have and execute it. The maximum size of the database files defaults to 8 GB; this can be changed with the `--backend-store-size` option.


Semiconductor Device Fabrication: Progress of Miniaturisation and Comparison of Sizes of Semiconductor Manufacturing

8: one page is 8 KB. 1024: 1 MB is 1024 KB.

Wikipedia database size. Ingman M, Gyllensten U. Includes articles and categories pertaining to various dimensions. GitHub: msfasha/WikiExtractor-1.

To determine the internal file size information for a single table, you can execute the following statement in SQL Studio or Database Studio. SXMSCLUP, SXMSCLUR, and not much control after running archiving, deletion, and other housekeeping activities. This requires PHP to have zlib support enabled, which is usually the case.

This document will help address inconsistencies in the ArvDel process. Wikimedia provides public dumps of our wikis' content and of related data such as search indexes and short URL mappings. Some of this data is available in the first release of Wikistats 2.

WikiExtractor extracts and cleans text from a Wikipedia database dump and stores the output in a number of files of similar size in a given directory. Accepts any UTF-8 character. No padding; same as LONG VARCHAR.
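The idea of "files of similar size" can be illustrated with a small sketch. This is not WikiExtractor's actual code: the helper name `split_into_files`, the 1 KB default limit and the `wiki_NN` file names are assumptions chosen for illustration. It starts a new output file whenever the next document would push the current one past the limit.

```python
import os
import tempfile


def split_into_files(documents, out_dir, max_bytes=1024):
    """Hypothetical helper: write documents into numbered files, starting a
    new file when adding the next document would exceed max_bytes.

    A single document larger than max_bytes still gets its own file, so that
    file can exceed the limit. Naming is illustrative, not WikiExtractor's.
    """
    os.makedirs(out_dir, exist_ok=True)
    paths = []
    current, current_size = [], 0

    def flush():
        # Write the buffered documents to the next numbered file.
        path = os.path.join(out_dir, f"wiki_{len(paths):02d}")
        with open(path, "w", encoding="utf-8") as fh:
            fh.write("".join(current))
        paths.append(path)

    for doc in documents:
        size = len(doc.encode("utf-8"))  # measure in bytes, not characters
        if current and current_size + size > max_bytes:
            flush()
            current, current_size = [], 0
        current.append(doc)
        current_size += size
    if current:
        flush()
    return paths


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        docs = ["<doc>" + "x" * 400 + "</doc>\n" for _ in range(5)]
        for path in split_into_files(docs, tmp):
            print(os.path.basename(path), os.path.getsize(path))
```

With five 412-byte documents and a 1024-byte limit, two documents fit per file, so the last file holds the remainder.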

Stores up to the specified number of characters. In the above result, all three sizes are expressed in data pages: Size and MinSize are 1280 pages, which corresponds to 10 MB, and MaxSize is 12800 pages, which is 100 MB. Pads shorter strings with trailing spaces.
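The fragments above contrast fixed-length and variable-length character types. Assuming standard SQL semantics (CHAR(n) pads shorter values with trailing spaces, VARCHAR(n) stores up to n characters without padding), a small Python model of the two behaviours looks like this. The function names are hypothetical, and real databases differ in whether an over-long value raises an error or is silently truncated; this sketch raises.

```python
def store_char(value: str, n: int) -> str:
    """Model of CHAR(n): shorter strings are padded with trailing spaces."""
    if len(value) > n:
        raise ValueError(f"value too long for CHAR({n})")
    return value.ljust(n)


def store_varchar(value: str, n: int) -> str:
    """Model of VARCHAR(n): stores up to n characters, no padding."""
    if len(value) > n:
        raise ValueError(f"value too long for VARCHAR({n})")
    return value


if __name__ == "__main__":
    print(repr(store_char("abc", 5)))     # padded to 5 characters
    print(repr(store_varchar("abc", 5)))  # stored as-is
```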

This will report size information for all tables in both raw bytes and pretty form. `LANGUAGE sql`. Dimension of the N'Garai.

Human Mitochondrial Genome Database: a resource for population genetics and medical sciences. Use CHECK TABLE WITH SHARE LOCK, or even better CHECK TABLE UPDATE COUNTERS; check note 1315198 for more information and availability. Beware that text will be completely unreadable if you later move to a server without PHP zlib support.

Dimension Z (Arnim Zola), Dimension Z (Living Eraser), Dimension Zee. Nucleic Acids Research 34: D749-D751 (2006). PI production database size is growing (large tables).

Select your saved database and voilà. This is the final release of the Wikistats-1 dump-based reports. By default, MediaWiki saves text into the database uncompressed.

Redirects: Jan 31, 2019. This will help ensure that everyone can access the files with reasonable download times.

Clients that try to. The size of the text table entries for new edits can be reduced by about half by enabling `$wgCompressRevisions`.
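To get a feel for how much wikitext shrinks under zlib, here is a sketch using Python's `zlib` module. A negative `wbits` value produces raw DEFLATE data with no header, the same stream format that PHP's `gzdeflate()` emits; whether MediaWiki stores revisions in exactly this format is an assumption here. The sample text is made up, and real revisions will compress by varying amounts, so this does not verify the "about half" figure.

```python
import zlib


def deflate_raw(text: str) -> bytes:
    """Compress UTF-8 text as raw DEFLATE (wbits=-15: no zlib/gzip header)."""
    comp = zlib.compressobj(level=6, wbits=-15)
    return comp.compress(text.encode("utf-8")) + comp.flush()


def inflate_raw(data: bytes) -> str:
    """Reverse of deflate_raw(): decompress raw DEFLATE back to text."""
    return zlib.decompress(data, wbits=-15).decode("utf-8")


if __name__ == "__main__":
    # Made-up, highly repetitive sample; real articles compress less.
    sample = "{{Infobox}}\n'''Example''' is an article. " * 50
    packed = deflate_raw(sample)
    print(len(sample.encode("utf-8")), "bytes ->", len(packed), "bytes")
```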

Human mitochondrial genome database. For a description, see. If you are reading this on Wikimedia servers, please note that we have rate-limited downloaders and we are capping the number of per-IP connections to 2. The LMDB backend can be enabled when provisioning or joining a domain using the `--backend-store=mdb` option.
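One way to stay within a per-IP connection cap such as the 2 connections mentioned above is to bound the downloader's concurrency. This sketch uses a thread pool with `max_workers=2`; `FakeFetcher` is a hypothetical stand-in that records peak concurrency so the example runs without network access. In practice you would pass a real HTTP download function as `fetch`.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def download_all(urls, fetch, max_connections=2):
    """Call fetch(url) for every URL with at most max_connections in flight.

    The pool size is the concurrency cap; pool.map returns results in the
    same order as the input URLs.
    """
    with ThreadPoolExecutor(max_workers=max_connections) as pool:
        return list(pool.map(fetch, urls))


class FakeFetcher:
    """Hypothetical fetcher that tracks how many calls overlap in time."""

    def __init__(self):
        self._lock = threading.Lock()
        self.active = 0
        self.peak = 0

    def __call__(self, url):
        with self._lock:
            self.active += 1
            self.peak = max(self.peak, self.active)
        time.sleep(0.01)  # simulate transfer time
        with self._lock:
            self.active -= 1
        return f"downloaded {url}"


if __name__ == "__main__":
    fetcher = FakeFetcher()
    results = download_all([f"part{i}" for i in range(6)], fetcher)
    print(results[0], "| peak connections:", fetcher.peak)
```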

For the purposes of this database, dimensions are defined as planes of existence outside the normal spacetime continuum, but they are often tangentially linked to a specified reality. `SELECT pg_size_pretty(total_bytes) AS total, pg_size_pretty(index_bytes) AS index, pg_size_pretty(toast_bytes) AS toast, pg_size_pretty(table_bytes) AS table FROM (SELECT total_bytes - index_bytes - coalesce(toast_bytes, 0) AS table_bytes FROM (SELECT c. …` Stores exactly the number of characters specified by the user.
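The query derives the plain table (heap) size as total bytes minus index bytes minus TOAST bytes, using `coalesce` so that a table with no TOAST data counts as 0. The same arithmetic in Python, with a hypothetical `table_bytes` helper and made-up numbers, where SQL NULL is modelled as `None`:

```python
from typing import Optional


def table_bytes(total_bytes: int, index_bytes: int,
                toast_bytes: Optional[int]) -> int:
    """Heap size = total - indexes - TOAST.

    A missing TOAST size (None, i.e. SQL NULL) is treated as 0,
    mirroring coalesce(toast_bytes, 0) in the query.
    """
    return total_bytes - index_bytes - (toast_bytes or 0)


if __name__ == "__main__":
    print(table_bytes(1_000_000, 300_000, None))     # no TOAST table
    print(table_bytes(1_000_000, 300_000, 200_000))  # with TOAST data
```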

The LMDB backend permits database sizes greater than 4 GB. Usually, the scheduled PI housekeeping jobs (ArvDel) will clean up automatically by default from t-code SXMB_ADM. 2 GB for a 32-bit OS.
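The two backend options can be combined when provisioning. The sketch below only builds the command line and does not run it. `samba-tool domain provision` is Samba's provisioning command and `--backend-store=mdb` / `--backend-store-size` are the options named in the text; the `16Gb` value and its unit spelling, plus the helper name `provision_command`, are assumptions, so check `samba-tool domain provision --help` on your Samba version first. A real provision usually also takes options such as `--realm` and `--domain`, omitted here.

```python
import shlex
from typing import List


def provision_command(store_size: str = "16Gb") -> List[str]:
    """Hypothetical helper: build (not run) a samba-tool provisioning
    command that selects the LMDB backend with a larger size limit.

    The store_size format is an assumption; the default limit per the
    text is 8 GB.
    """
    return [
        "samba-tool", "domain", "provision",
        "--backend-store=mdb",
        f"--backend-store-size={store_size}",
    ]


if __name__ == "__main__":
    # Print the shell-quoted command for review instead of executing it.
    print(shlex.join(provision_command()))
```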

`CREATE OR REPLACE FUNCTION sizedb() RETURNS text AS $$ SELECT pg_size_pretty(pg_database_size(current_database())); $$ LANGUAGE sql;` MitoVariome: a freely available database of 5344 mtDNA sequences from around the world. You now have access to Wikipedia's library of articles.
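`pg_size_pretty` turns the byte count from `pg_database_size` into a readable string. The function below is a rough Python re-implementation for non-negative sizes. It assumes PostgreSQL's rule of staying in a unit until the value would reach about 10 of the next unit (so 10239 bytes stays in bytes, 10240 becomes 10 kB) and rounding halves up. It stops at TB, and newer PostgreSQL releases may format very large values differently. The name `size_pretty` is hypothetical.

```python
def size_pretty(size: int) -> str:
    """Approximate pg_size_pretty() for non-negative byte counts."""
    if size < 0:
        raise ValueError("this sketch only handles non-negative sizes")
    limit = 10 * 1024        # below this, report plain bytes
    limit2 = limit * 2 - 1   # threshold in half-units (one extra bit kept)
    if size < limit:
        return f"{size} bytes"
    size >>= 9  # now in half-kB units, keeping one bit for rounding
    for unit in ("kB", "MB", "GB"):
        if size < limit2:
            return f"{(size + 1) // 2} {unit}"  # round half up
        size >>= 10  # move to half-units of the next unit
    return f"{(size + 1) // 2} TB"


if __name__ == "__main__":
    for n in (10239, 10240, 8 * 1024**2, 10 * 1024**2, 10 * 1024**3):
        print(n, "->", size_pretty(n))
```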

The dumps are used by researchers and in offline reader projects, for archiving, for bot editing of the wikis, and for providing the data in an easily queryable format, among other things. A dimension may be a universe, which contains a virtually infinite amount of space, or a pocket dimension, which is clearly finite and often relatively small.

1280 × 8 / 1024 = 10 MB, where 1280 is the total number of pages. Dimensions are not to be confused with parallel and/or alternate realities. GitHub: apertium/WikiExtractor.
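The page arithmetic works for any page count. A minimal sketch, assuming 8 KB pages as stated above; the constant and function names are illustrative:

```python
PAGE_SIZE_KB = 8   # 1 page = 8 KB
KB_PER_MB = 1024   # 1 MB = 1024 KB


def pages_to_mb(pages: int) -> float:
    """Convert a count of 8 KB data pages to megabytes."""
    return pages * PAGE_SIZE_KB / KB_PER_MB


for label, pages in (("MinSize", 1280), ("MaxSize", 12800)):
    print(f"{label}: {pages} pages = {pages_to_mb(pages):g} MB")
# MinSize: 1280 pages = 10 MB
# MaxSize: 12800 pages = 100 MB
```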

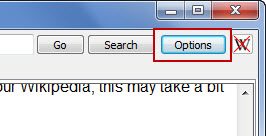

A dimension is a portion of reality containing space, time, matter, and energy, and it is separated from other dimensions by some physical difference in these elements. In the top right corner you will see an option tab.

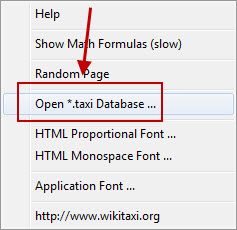

Combined size of all articles incl. Click on this and scroll down to Open taxi Database.


List Of Examples Of Lengths Wikipedia

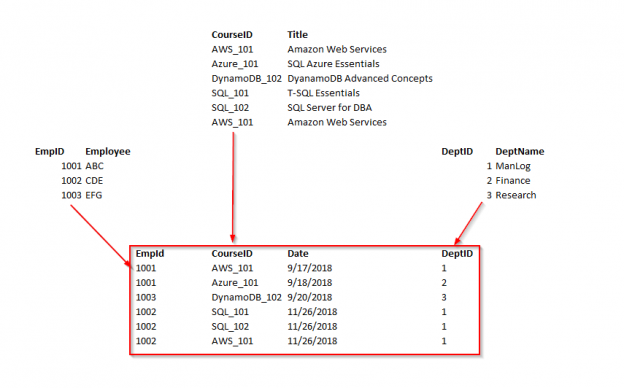

What Is Database Normalization In SQL Server

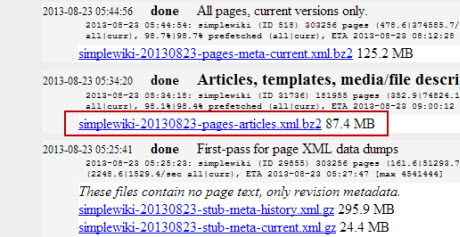

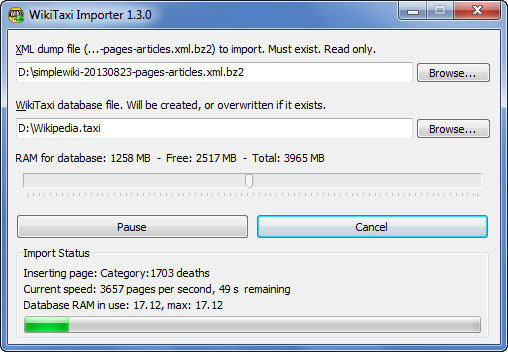

How To Download And View Wikipedia Offline